Join Us on Apr 30: Unveiling Parasoft C/C++test CT for Continuous Testing & Compliance Excellence

Products

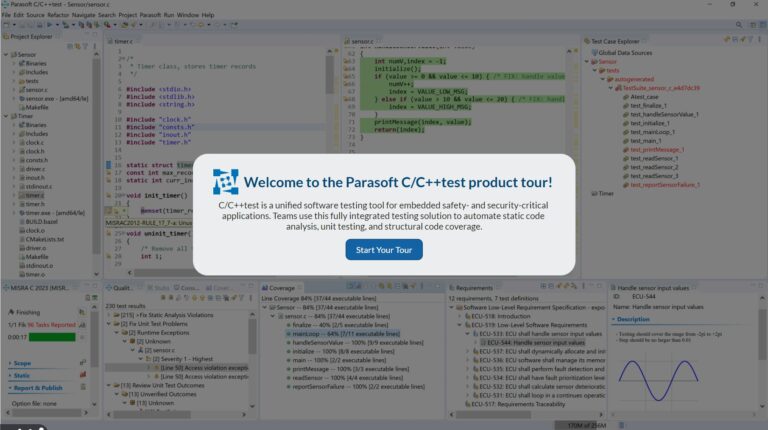

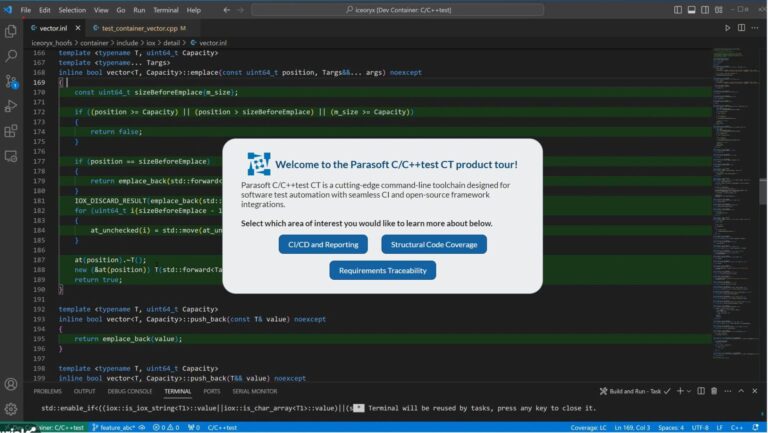

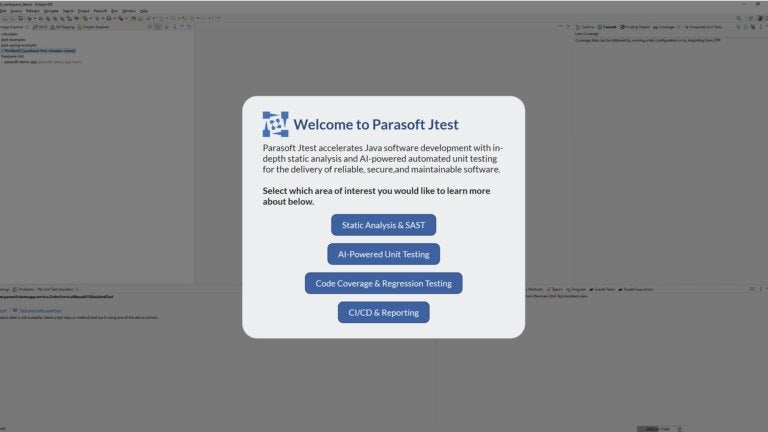

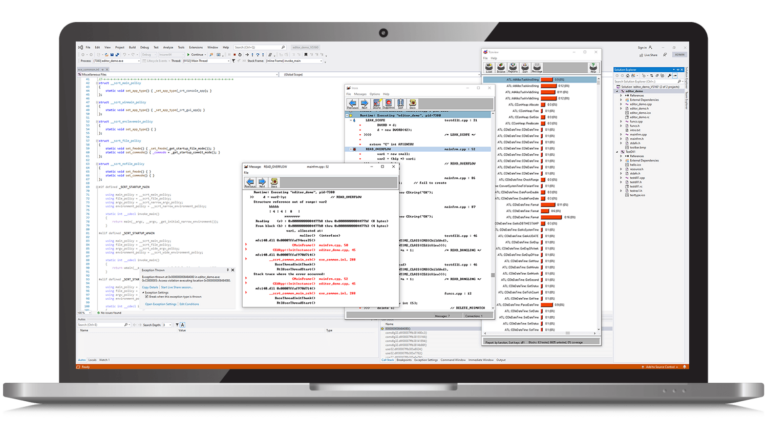

Innovative and Intelligent Software Testing Platform

Enable continuous quality, deliver at speed, and comply with industry standards with automated software testing tools that find and fix defects at every stage of the SDLC.

Benefits of Parasoft's Automated Solutions

Built from the ground up, our automated software testing solutions set the standard for innovation and seamlessly integrate with your product portfolio.

Work Smarter With AI

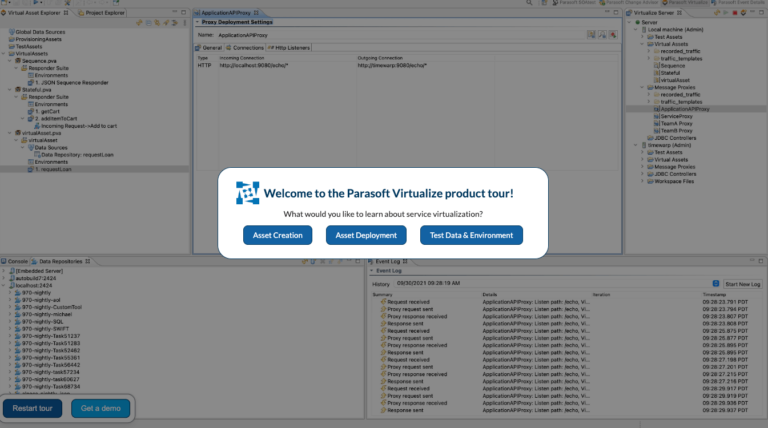

Find and fix defects faster with our AI-powered static analysis, unit testing, API testing, test execution optimization, and more.

Test Early, Test Continuously

Prevent, detect, and fix defects early in the software development life cycle and integrate quality at every stage along the way.

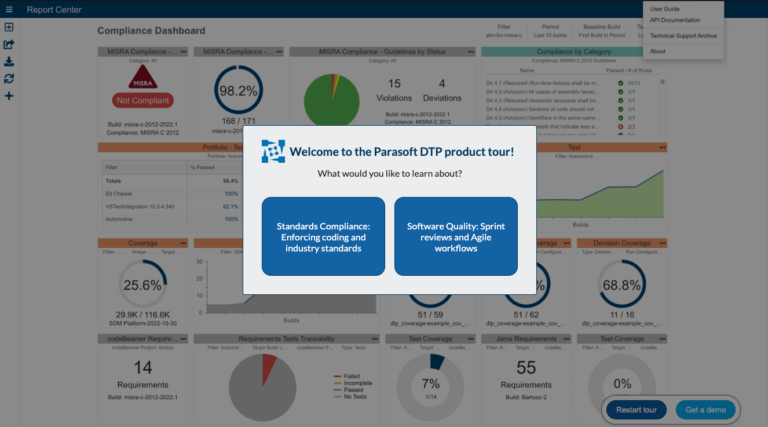

Automate Compliance

Reduce the cost and risk of achieving compliance with safety, reliability, and security standards by automating testing and verification.

Helping Customers Achieve & Succeed

Accelerate and deliver high-quality software with confidence, just like other companies that choose Parasoft.

10X Faster Delivery

Reduced test execution time with automation

Higher Quality

Met coverage requirements with AI-generated unit testing

Streamlined Compliance

Achieved compliance with safety & security standards (AUTOSAR, CERT, & more)

Lower Risk

Reduced late-cycle, high-impact defects


Why Customers Love Our Automated Solutions

“Parasoft C/C++test includes all of the tooling needed to build safe and reliable software. The best feature is the integration of unit testing alongside static analysis and compliance checking. If you are building any safety- or security-critical software, Parasoft C/C++test is a no-brainer.”

Mark G., Software Engineering Group Lead

“Easy to set up. Awesome community. Longer shelf life. The support team was always available for all of our silly questions. The generated reports were very descriptive and useful at the organization level for reviews and quality standard reviews. Even the performance metrics we captured were time-saving and accurate.”

Telecommunications Industry

Not sure where to start? Talk with an expert.